How Fluentd Collects Kubernetes Cluster Logs


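EFK (Elasticsearch + Fluentd + Kibana) is the log collection stack officially recommended by Kubernetes. To mark Fluentd's graduation from the CNCF, let's look at how Fluentd collects logs from a Kubernetes cluster. Before starting, I hope you have read "Docker Container Log Analysis"; this article is the second in that series and builds on it.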

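Note: do not confuse this stack with ELK (Elasticsearch + Logstash + Kibana) or with the other EFK (Elasticsearch + Filebeat + Kibana). The Filebeat-based EFK is usually deployed natively, that is, directly on hosts rather than inside Kubernetes.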

CNCF stands for Cloud Native Computing Foundation. Kubernetes is one of its projects, as are most container-cloud projects.

Deploying EFK

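The YAML files used to deploy EFK on Kubernetes are at https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch ; you can download them with the script provided in the appendix.

Once the download finishes, run `cd fluentd-elasticsearch && kubectl apply -f .` to deploy.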

Check the Elasticsearch and Kibana Services, for example with `kubectl get svc -n kube-system`.

Check the Fluentd DaemonSet, for example with `kubectl get daemonset -n kube-system`.

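This tells us that Fluentd runs as a DaemonSet, while Elasticsearch and Kibana are each exposed through a Service (backed by a StatefulSet and a Deployment respectively, as the manifest file names indicate).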

Note: the default Elasticsearch manifest has no persistent storage. If you need persistence, adjust its PVC settings.

How Fluentd Works

- Check the Fluentd workload type (nothing surprising here)

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es-v2.2.1
  namespace: kube-system
```

- Check how Fluentd gets at the logs

```yaml
containers:
- name: fluentd-es
  image: k8s.gcr.io/fluentd-elasticsearch:v2.2.0
  ...
  volumeMounts:
  - name: varlog
    mountPath: /var/log
  - name: varlibdockercontainers
    mountPath: /var/lib/docker/containers
    readOnly: true
  - name: config-volume
    mountPath: /etc/fluent/config.d
...
volumes:
- name: varlog
  hostPath:
    path: /var/log
- name: varlibdockercontainers
  hostPath:
    path: /var/lib/docker/containers
- name: config-volume
  configMap:
    name: fluentd-es-config-v0.1.6
```

This makes it clear that Fluentd runs as a DaemonSet and mounts the host's `/var/lib/docker/containers` directory. As described in "Docker Container Log Analysis", this is where Docker stores container logs, so the mount gives Fluentd read access to the default container logs. Fluentd's configuration is loaded from a ConfigMap; let's look at it next.

- Container log collection configuration

Container log collection is configured mainly in containers.input.conf, as follows:

```
<source>
  @id fluentd-containers.log
  @type tail
  path /var/log/containers/*.log
  pos_file /var/log/es-containers.log.pos
  tag raw.kubernetes.*
  read_from_head true
  <parse>
    @type multi_format
    <pattern>
      format json
      time_key time
      time_format %Y-%m-%dT%H:%M:%S.%NZ
    </pattern>
    <pattern>
      format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
      time_format %Y-%m-%dT%H:%M:%S.%N%:z
    </pattern>
  </parse>
</source>
```

If you look closely, you will notice that the mounted container directory is `/var/lib/docker/containers`, which is where the logs should be, yet the directory being tailed is `/var/log/containers`. The upstream configuration helpfully explains this in its comments, the main part of which is:

```
# Example
# =======
# ...
#
# The Kubernetes fluentd plugin is used to write the Kubernetes metadata to the log
# record & add labels to the log record if properly configured. This enables users
# to filter & search logs on any metadata.
# For example a Docker container's logs might be in the directory:
#
#  /var/lib/docker/containers/997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b
#
# and in the file:
#
#  997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b-json.log
#
# where 997599971ee6... is the Docker ID of the running container.
# The Kubernetes kubelet makes a symbolic link to this file on the host machine
# in the /var/log/containers directory which includes the pod name and the Kubernetes
# container name:
#
#    synthetic-logger-0.25lps-pod_default_synth-lgr-997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b.log
#    ->
#    /var/lib/docker/containers/997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b/997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b-json.log
#
# The /var/log directory on the host is mapped to the /var/log directory in the container
# running this instance of Fluentd and we end up collecting the file:
#
#   /var/log/containers/synthetic-logger-0.25lps-pod_default_synth-lgr-997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b.log
#
```

Since the files under `/var/log/containers` are symlinks into `/var/lib/docker/containers`, both host directories have to be mounted so that Fluentd can follow the links.

- Uploading logs to Elasticsearch

```
output.conf: |-
  <match **>
    @id elasticsearch
    @type elasticsearch
    @log_level info
    type_name _doc
    include_tag_key true
    host elasticsearch-logging
    port 9200
    logstash_format true
    <buffer>
      @type file
      path /var/log/fluentd-buffers/kubernetes.system.buffer
      flush_mode interval
      retry_type exponential_backoff
      flush_thread_count 2
      flush_interval 5s
      retry_forever
      retry_max_interval 30
      chunk_limit_size 2M
      queue_limit_length 8
      overflow_action block
    </buffer>
  </match>
```

Note the host and port here: both come from the Elasticsearch Service definition, so if you have changed that Service, keep them in sync. Fluentd can also ship logs to an Elasticsearch instance outside the cluster, that is, the natively deployed ELK/EFK mentioned earlier.
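To make the tail source in containers.input.conf more concrete, here is a minimal Python sketch of the two things it relies on: the pod, namespace, and container names encoded in each `/var/log/containers` symlink name, and the two line formats that multi_format tries in order (Docker's JSON lines first, then the plain `time stream flag log` layout). This is an illustration under assumptions, not Fluentd's actual implementation: the function names and the FILENAME_RE pattern are hypothetical, inferred from the example file name in the upstream comment, and the sample log lines are made up.

```python
import json
import re

# Hypothetical pattern inferred from the example symlink name in the upstream
# comment: <pod>_<namespace>_<container>-<64-hex container id>.log
FILENAME_RE = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)"
    r"-(?P<docker_id>[0-9a-f]{64})\.log$"
)

# Second <pattern> from containers.input.conf, rewritten in Python's
# named-group syntax: (?<name>...) becomes (?P<name>...).
PLAIN_RE = re.compile(r"^(?P<time>.+) (?P<stream>stdout|stderr) [^ ]* (?P<log>.*)$")


def parse_symlink_name(filename):
    """Split a /var/log/containers file name into its metadata parts."""
    match = FILENAME_RE.match(filename)
    return match.groupdict() if match else None


def parse_line(line):
    """Try the JSON pattern first, then the plain-text pattern."""
    try:
        record = json.loads(line)
        if isinstance(record, dict):
            return record
    except ValueError:
        pass  # not JSON, fall through to the second pattern
    match = PLAIN_RE.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    # File name taken from the upstream comment quoted above.
    name = (
        "synthetic-logger-0.25lps-pod_default_synth-lgr-"
        "997599971ee6366d4a5920d25b79286ad45ff37a74494f262e3bc98d909d0a7b.log"
    )
    print(parse_symlink_name(name))
    # Made-up sample lines, one per format.
    print(parse_line('{"log":"hello\\n","stream":"stdout","time":"2019-04-13T08:00:00.1Z"}'))
    print(parse_line("2019-04-13T08:00:00.1+08:00 stderr F something failed"))
```

Trying JSON first mirrors the order of the `<pattern>` blocks in the config: a line that is not valid JSON simply falls through to the regular expression, and a line matching neither yields no record in this sketch.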

Appendix

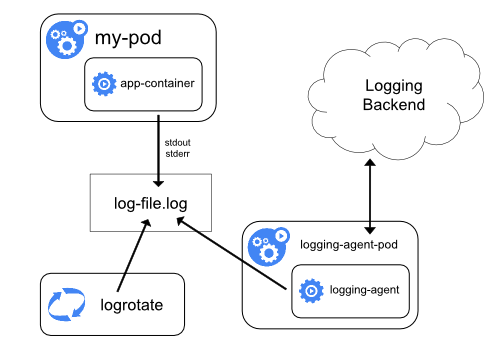

- Architecture diagram

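- Download script, download.sh

```shell
# Download the addon manifests into ./fluentd-elasticsearch
mkdir -p fluentd-elasticsearch
for file in es-service es-statefulset fluentd-es-configmap fluentd-es-ds kibana-deployment kibana-service; do
  curl -o "fluentd-elasticsearch/$file.yaml" "https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/$file.yaml"
done
```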
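The same download can be sketched in Python for cases where you want to pin the manifests to a specific Git ref instead of master, which changes over time. This is only an illustration: the `manifest_urls` and `download_all` helpers, the `ref` parameter, and the target directory name are assumptions made for this example and are not part of the original script.

```python
import pathlib
import urllib.request

BASE = "https://raw.githubusercontent.com/kubernetes/kubernetes"
ADDON_PATH = "cluster/addons/fluentd-elasticsearch"
# Same manifest names as in the download.sh loop.
FILES = [
    "es-service",
    "es-statefulset",
    "fluentd-es-configmap",
    "fluentd-es-ds",
    "kibana-deployment",
    "kibana-service",
]


def manifest_urls(ref="master"):
    """Map each local file name to its raw URL at the given Git ref."""
    return {
        f"{name}.yaml": f"{BASE}/{ref}/{ADDON_PATH}/{name}.yaml"
        for name in FILES
    }


def download_all(ref="master", target="fluentd-elasticsearch"):
    """Fetch every manifest into `target`, creating the directory if needed."""
    out_dir = pathlib.Path(target)
    out_dir.mkdir(parents=True, exist_ok=True)
    for filename, url in manifest_urls(ref).items():
        # urlopen raises an HTTPError if the file does not exist at this ref.
        with urllib.request.urlopen(url) as response:
            (out_dir / filename).write_bytes(response.read())
```

Calling `download_all()` fetches the same six files as the shell loop; passing a branch name, tag, or commit SHA as `ref` selects the revision to download from, provided the addon directory exists at that revision.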


Author: shawn

Last updated: 2019-04-13